Agents


Revision as of 23:17, 24 January 2018


Agents definition

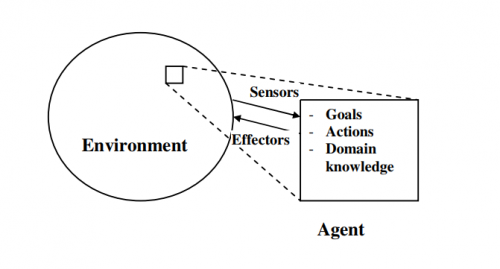

First of all, when talking about agents and multi-agent systems, we should try to define what an agent is. However, there is no single strict definition; a variety of different definitions exist. Some of them are quite simple, others are much more demanding, and all are strongly influenced by their background: a definition used in artificial intelligence will differ from one used in software engineering. A simple but clear and easily understood definition comes from S. Russell and P. Norvig:

“An agent is anything that can be viewed as perceiving its environment through sensors and acting upon that environment through effectors”.

Another, more precise definition that extends the one above comes from P. Maes:

“Autonomous agents are computational systems that inhabit some complex dynamic environment, sense and act autonomously in this environment, and by doing so realize a set of goals or tasks for which they are designed” [1]

The second definition specifies the kind of agents we are interested in: computational agents, which belong to the area of engineering and have nothing to do with natural agents from the field of biology, such as humans. It also says that the agents are autonomous, which means they have control over their actions and operate without the direct intervention of other entities. The last difference from the previous definition is that they act in order to achieve specific goals.

An example of an agent according to this description is a thermostat, a simple autonomous device whose goal is to maintain the temperature in a room. It contains a sensor that measures the actual temperature in the room, a memory that stores the desired temperature and the values read from the sensor, and a decision-making function that compares the measured temperature with the desired one (making this decision is an action). Based on the result, and using domain knowledge about what to do when the actual temperature is lower or higher than the desired temperature, it commands the heating or the air conditioning to switch on or off (commanding the heating is an effector).
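The thermostat's sense, decide and act cycle can be sketched in Python. This is a minimal illustration rather than a real device interface: the class name `Thermostat`, the `tolerance` dead band and the command strings are assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Thermostat:
    """Minimal thermostat agent: sensor reading -> decision -> effector command."""
    desired: float                                  # memory: the desired temperature (the goal)
    tolerance: float = 0.5                          # hypothetical dead band around the goal
    readings: list = field(default_factory=list)   # memory: past sensor values

    def sense(self, measured: float) -> None:
        # Sensor: store the actual room temperature.
        self.readings.append(measured)

    def decide(self) -> str:
        # Decision-making function: compare the measured and desired temperature
        # using simple domain knowledge about what to do in each case.
        current = self.readings[-1]
        if current < self.desired - self.tolerance:
            return "heating_on"
        if current > self.desired + self.tolerance:
            return "cooling_on"
        return "all_off"

    def act(self, command: str) -> str:
        # Effector: a real device would switch the heating or air conditioning;
        # here the command is simply returned.
        return command

    def step(self, measured: float) -> str:
        self.sense(measured)
        return self.act(self.decide())


agent = Thermostat(desired=21.0)
for temperature in (18.0, 21.2, 24.5):
    print(temperature, agent.step(temperature))
# 18.0 heating_on
# 21.2 all_off
# 24.5 cooling_on
```

The dead band is a design choice that keeps the device from switching on and off around the exact target value.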

A very similar, more specific definition, now almost twenty years old, comes from G. Weiss (1999):

“An agent is a computer system that is situated in some environment, and that is capable of autonomous action in this environment in order to meet its design objectives”

From these three definitions we can see that an agent can be almost anything, but in many cases the notion is narrower and only a certain kind of agent is acceptable for a given problem.

Agents characteristics

Communication and perception of the environment are two basic characteristics of agents. They might look very similar, but in some situations it is better to distinguish them. Agents use their senses to perceive, and through perception they obtain unstructured information from their environment, whereas during communication two or more agents send and receive structured information to and from each other. This ability is crucial for a group of agents to work as a system; without communication it would not be possible. Communication is also a necessary prerequisite for more advanced interactive abilities of agents, such as cooperation, coordination or competition, also called social abilities.
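The difference between unstructured perception and structured communication can be illustrated with a small Python sketch. The message fields (`sender`, `receiver`, `performative`, `content`) are an assumption loosely modelled on agent communication languages; the text does not prescribe any particular message format.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    """A structured message exchanged between agents (hypothetical format)."""
    sender: str
    receiver: str
    performative: str   # e.g. "inform" or "request"
    content: dict


class CommunicatingAgent:
    def __init__(self, name: str) -> None:
        self.name = name
        self.inbox: deque = deque()
        self.percepts: list = []

    def perceive(self, raw_signal: float) -> None:
        # Perception: an unstructured value read from the environment.
        self.percepts.append(raw_signal)

    def send(self, other: "CommunicatingAgent", performative: str, content: dict) -> None:
        # Communication: a structured record delivered to another agent.
        other.inbox.append(Message(self.name, other.name, performative, content))

    def receive(self):
        # Return the oldest pending message, or None if the inbox is empty.
        return self.inbox.popleft() if self.inbox else None


a, b = CommunicatingAgent("a"), CommunicatingAgent("b")
a.perceive(0.73)                              # raw sensor value
a.send(b, "inform", {"temperature": 0.73})    # structured information
msg = b.receive()
print(msg.performative, msg.content)          # inform {'temperature': 0.73}
```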

Another basic characteristic of agents is reactiveness. Thanks to the previous abilities, agents can perceive or receive information, but it is equally important that they can react to it. They can perform different actions based on the decisions they make. The decision-making process can be simple, but also very complicated and sophisticated, depending on the specific agent. After deciding what to do, agents act upon the environment or other agents.

Another characteristic is autonomy, which means that each agent can decide on its own, acts without external intervention and has control over its actions. To act autonomously, agents need goals that they try to achieve, so that they are able to make decisions. We say they are goal-oriented.

The last characteristic mentioned here is initiative. Agents do not only act when they are asked to, but also autonomously, on their own initiative, to fulfil their objectives.
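Reactiveness, autonomy, goal orientation and initiative can be combined in a single control loop. In the Python sketch below, all names are hypothetical: the agent reacts to incoming requests, decides by itself whether to accept them (it only accepts those that bring it closer to its goal), and when no request arrives it still acts on its own initiative until the goal is reached.

```python
from typing import Optional


class ProactiveAgent:
    """Hypothetical agent that reacts to requests but also acts on its own initiative."""

    def __init__(self, goal: int) -> None:
        self.goal = goal        # goal-oriented: the position it tries to reach
        self.position = 0

    def accepts(self, request: int) -> bool:
        # Autonomy: the agent itself decides whether a request brings it closer to its goal.
        return abs(request - self.goal) < abs(self.position - self.goal)

    def step(self, request: Optional[int] = None) -> str:
        if request is not None:
            if self.accepts(request):
                self.position = request              # reactive: responds to the request
                return f"moved to {request} on request"
            return f"refused {request}"              # control over its own actions
        if self.position != self.goal:
            # Initiative: nobody asked, but the goal has not been reached yet.
            self.position += 1 if self.goal > self.position else -1
            return f"moved to {self.position} on own initiative"
        return "idle"


agent = ProactiveAgent(goal=3)
for request in (None, -5, 2, None, None):
    print(agent.step(request))
# moved to 1 on own initiative
# refused -5
# moved to 2 on request
# moved to 3 on own initiative
# idle
```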

Another author [1] gives a similar list of agent properties, claiming these are the most frequently mentioned ones:

| Property | Meaning |
|---|---|
| Reactive | Responds in a timely fashion to changes in the environment |
| Autonomous | Exercises control over its own actions |
| Goal-oriented | Does not simply act in response to the environment |
| Temporally continuous | Is a continuously running process |
| Communicative | Communicates with other agents |
| Learning | Changes its behaviour based on its previous experience |
| Mobile | Able to transport itself from one machine to another (associated mainly with software agents) |
| Flexible | Actions are not scripted |

Note: Some of the properties mentioned above apply only to some kinds of agents, not to all of them.

Categorization of agents

As with the definition, there are many authors and many different taxonomies for the categorization of agents. According to the first definition, agents are entities that sense their environment and act upon it. They can be physical, virtual or a combination of both. Based on their physical substance, we can divide them into artificial and natural agents.

Artificial agents: there are different kinds of artificial agents, which we can divide as follows:

• Physical agents are tangible entities existing in the real world, for example sensors or other electronic devices. However, to function properly they are nowadays often combined with a virtual agent, i.e. software.

• Virtual agents, also called software agents, are pieces of code that perform a certain function. An example of a virtual agent is a computer virus.

Natural agents: humans, animals, bacteria or viruses can be considered natural agents. We most often come across this term in biology.

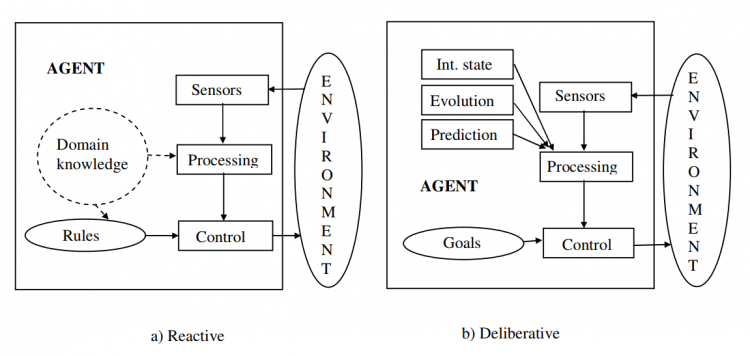

Many authors divide agents by their way of reasoning into deliberative, reactive and hybrid agents.

Reactive agents

Reactive agents are also called behaviour-based agents or purely reactive agents. They do not use artificial intelligence to reason about their environment. In contrast with deliberative agents, reactive agents do not create any models to plan their next actions; they simply react to the current perception. They are far simpler than deliberative agents, but that does not make them less important. Thanks to their simplicity, it is easier, faster and cheaper to develop them and to find and fix any problems that occur.

Let’s talk a bit about the architecture of reactive agents. They consist of behaviours: decentralized competence modules organized into a global structure. We often do not distinguish functional units such as sensors or decision-making units; instead, the input from a sensor is connected directly to a behaviour that produces an output, and together they form one module.
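A behaviour in this sense couples a sensor reading directly to an output, with no separate decision-making unit and no world model. A minimal Python sketch, assuming a hypothetical robot whose sensor readings arrive as a dictionary (`front_distance` and `light` are invented sensor names):

```python
from typing import Callable, Dict, List, Optional

# A percept is a hypothetical dictionary of raw sensor readings.
Percept = Dict[str, float]
# A behaviour maps a percept directly to an output command, or None if it does not fire.
Behaviour = Callable[[Percept], Optional[str]]


def avoid_obstacle(p: Percept) -> Optional[str]:
    # Sensor input wired straight to an output: no model, no planning.
    return "turn_left" if p.get("front_distance", 1.0) < 0.3 else None


def follow_light(p: Percept) -> Optional[str]:
    return "move_forward" if p.get("light", 0.0) > 0.5 else None


behaviours: List[Behaviour] = [avoid_obstacle, follow_light]


def outputs(percept: Percept) -> List[str]:
    # Each module reacts independently to the current percept;
    # how conflicting outputs are resolved depends on the overall architecture.
    return [cmd for b in behaviours if (cmd := b(percept)) is not None]


print(outputs({"front_distance": 0.2, "light": 0.9}))  # ['turn_left', 'move_forward']
```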

One of the most widely used architectures for reactive agents is the subsumption architecture by Brooks, which is based on several layers, each describing an action and a condition leading to this action. This architecture has two key assumptions:

• Situatedness and embodiment: it is important for agents to be physically present in the environment and to get all their information by sensing the environment or by receiving it from other agents.

• Intelligence and emergence: there is no intelligence in the agents themselves; it all comes from their interaction. That is why reactive agents mostly exist as part of a system, not alone.

The agents sense, check the condition and perform the action. Each layer has its own sensors and effectors and takes the form: if condition then action. The lower the layer, the higher its priority. Let’s look at how Brooks described the layers:

0. Avoid objects

1. Wander

2. Explore

3. Build maps

4. Monitor changes

5. Identify objects

6. Plan changes to the world

7. Reason about behaviour of objects [2]

The lowest layer (layer 0) has the highest priority, which means that the most important thing for the agent is to avoid obstacles. When there is no obstacle, the agent can wander around the world without crashing into objects, and so on. The first matching rule applies.
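The priority rule can be sketched as an ordered list of condition-action pairs in which the lowest-numbered layer whose condition holds wins. This is a simplified software illustration of the arbitration idea, not Brooks's original implementation (which connected layers of augmented finite state machines through suppression and inhibition); only the first three layers are modelled and the percept keys are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Percept = Dict[str, bool]
# (layer number, name, condition, action): the lower the number, the higher the priority
Layer = Tuple[int, str, Callable[[Percept], bool], str]

LAYERS: List[Layer] = [
    (0, "avoid objects", lambda p: p.get("obstacle_ahead", False), "turn_away"),
    (1, "wander", lambda p: not p.get("unexplored_area_seen", False), "random_walk"),
    (2, "explore", lambda p: p.get("unexplored_area_seen", False), "head_to_unexplored_area"),
]


def select_action(percept: Percept) -> str:
    # Check layers from the highest priority (layer 0) upwards;
    # the first matching rule applies and the remaining layers are ignored.
    for _number, _name, condition, action in sorted(LAYERS, key=lambda layer: layer[0]):
        if condition(percept):
            return action
    return "stop"   # fallback if no condition holds


print(select_action({"obstacle_ahead": True, "unexplored_area_seen": True}))  # turn_away
print(select_action({"unexplored_area_seen": True}))  # head_to_unexplored_area
```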

Another architectural approach is the agent network architecture by Maes. Here the behaviours are not organized in layers but in networks of interconnected modules. Two types of modules are distinguished: action modules (to perform a certain action) and belief modules (to hold the agent’s attitudes). The functions are driven by signals from sensors and by goals represented in belief modules. Similarities with neural networks can be found in this approach.
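A heavily simplified Python sketch of the idea: each action module has preconditions and receives activation both from the current sensor state and from the goals; among the executable modules, the most activated one is chosen. Maes's actual algorithm also spreads activation between modules along successor, predecessor and conflicter links and uses thresholds and decay, all of which are omitted here. The module names and the weights `from_state` and `from_goals` are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional, Set


@dataclass
class ActionModule:
    name: str
    preconditions: FrozenSet[str]   # propositions that must hold for the action to run
    adds: FrozenSet[str]            # propositions the action is expected to make true
    activation: float = 0.0


def choose(modules: List[ActionModule], state: Set[str], goals: Set[str],
           from_state: float = 1.0, from_goals: float = 2.0) -> Optional[ActionModule]:
    """Pick the most activated executable module (no spreading between modules)."""
    for m in modules:
        # Energy from the sensors: number of preconditions that currently hold.
        m.activation = from_state * len(m.preconditions & state)
        # Energy from the goals: number of goals the action would achieve.
        m.activation += from_goals * len(m.adds & goals)
    executable = [m for m in modules if m.preconditions <= state]
    return max(executable, key=lambda m: m.activation, default=None)


modules = [
    ActionModule("pick_up_cup", frozenset({"cup_visible", "hand_empty"}), frozenset({"holding_cup"})),
    ActionModule("drink", frozenset({"holding_cup"}), frozenset({"not_thirsty"})),
    ActionModule("wander", frozenset(), frozenset({"explored"})),
]
print(choose(modules, {"cup_visible", "hand_empty"}, {"not_thirsty"}).name)  # pick_up_cup
print(choose(modules, {"holding_cup"}, {"not_thirsty"}).name)                # drink
```

Without spreading activation, `pick_up_cup` gets no credit for enabling `drink`; the inter-module links omitted here are what allow such actions to be chained towards a goal.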

To sum up, reactive agents are quite simple, have no artificial intelligence and perform no real reasoning; they only react to perceptions, create no models of the environment in their memory and need to be present in the environment, and their intelligence comes from their mutual interaction.

Deliberative agents

Deliberative agents, also called intelligent agents or rational agents, are agents with their own intelligent behaviour coming from their artificial intelligence. They work with a symbolic representation of the external world: they create a model of the external world in their memory and can use this model for further planning. Deliberative agents do not necessarily have only one goal; they can pursue very complex goals, long-term goals or multiple goals in a hierarchy, so the decision-making process is complicated by choosing the most appropriate goal for a certain situation.

Some goals cannot be achieved by the agent itself. It then needs to cooperate with other agents, who can accept its request but do not have to, for example if it does not fit their objectives. The agents do not stay at the same level of knowledge all the time: they use their memory and learn from their own experiences and the experiences of other agents, so they can develop their skills.

A disadvantage of deliberative agents is their complexity. It is not easy to develop and maintain such an agent; bugs can occur and might be difficult to fix. They are also very demanding in terms of computational power.

From the outside, the behaviour of a deliberative agent looks very similar to that of a reactive agent. It senses its environment, evaluates the best response and performs an appropriate action. But it does much more than that, and the interesting and complicated part is what happens inside. As already mentioned, the agent uses its memory, which stores some basic knowledge, everything the agent has learned from past experience, the agent’s state and the agent’s goals.

At the beginning there is an input function that transforms perceptions and received information (from simple ones to complex ones such as human speech) into a symbolic representation that the agent understands: the input. Based on the input, the internal state of the agent is changed. Finally, the action appropriate to the current state is performed. This is the basic architecture of deliberative agents, but their design might differ. Let’s look at some of the design approaches that are used.
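The three steps (input function, state update, action selection) can be written as three separate functions, mirroring the see/next/action decomposition used in some multi-agent textbooks. The concrete percept format, the symbolic facts and the rules in `action` are assumptions made for illustration (a hypothetical cleaning robot).

```python
from typing import Dict, FrozenSet

Percept = Dict[str, float]    # raw sensor data (hypothetical)
Symbols = FrozenSet[str]      # symbolic representation the agent understands
State = FrozenSet[str]        # internal state: accumulated symbolic facts


def see(percept: Percept) -> Symbols:
    # Input function: turn raw perceptions into symbols.
    facts = set()
    if percept.get("battery", 1.0) < 0.2:
        facts.add("battery_low")
    if percept.get("dirt", 0.0) > 0.5:
        facts.add("floor_dirty")
    return frozenset(facts)


def next_state(state: State, symbols: Symbols) -> State:
    # Update the internal state with the new input
    # (facts simply accumulate here; a real agent would also retract stale ones).
    return state | symbols


def action(state: State) -> str:
    # Choose the action appropriate to the current state.
    if "battery_low" in state:
        return "go_to_charger"
    if "floor_dirty" in state:
        return "clean"
    return "explore"


state: State = frozenset()
for percept in ({"dirt": 0.9}, {"battery": 0.1}):
    state = next_state(state, see(percept))
    print(action(state))
# clean
# go_to_charger
```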

Utility-based approach: this approach uses utility to decide what to do and what not to do, what to do first and what to do later. Utility can be cardinal (each option is assigned a real number; this is quite easy to use) or ordinal (options are ranked from the one with the highest utility to the one with the lowest, without showing how much lower the utility of an option is compared to the previous one). The utility-based approach is useful in environments where the result is partly random and partly dependent on the agent’s decision. It works with the average utility of the states resulting from a specific action, weighted by their probabilities. A possible problem is that the utility of a certain option is subjective, and it is not clear how agents should assign utility to options in a real simulation. Moreover, in more complex problems this can be very demanding in terms of computing power.
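The probability-weighted average described above is the expected utility of an action. A minimal Python sketch follows: for each action, the utilities of its possible outcome states are weighted by their probabilities and summed, and the action with the highest expected utility is chosen. The actions, probabilities and utility values are invented for illustration.

```python
from typing import Dict, List, Tuple

# For each action: list of (probability, cardinal utility of the resulting state).
# These numbers are purely illustrative.
OUTCOMES: Dict[str, List[Tuple[float, float]]] = {
    "safe_route":  [(1.0, 5.0)],
    "risky_route": [(0.6, 10.0), (0.4, -2.0)],
}


def expected_utility(outcomes: List[Tuple[float, float]]) -> float:
    # Probability-weighted average utility of the states an action can lead to.
    return sum(p * u for p, u in outcomes)


def best_action(table: Dict[str, List[Tuple[float, float]]]) -> str:
    # Choose the action with the highest expected utility.
    return max(table, key=lambda a: expected_utility(table[a]))


print(best_action(OUTCOMES))  # risky_route (expected utility 5.2 vs 5.0)
```

Note that this calculation needs cardinal utilities: with an ordinal ranking alone, a probability-weighted average is not meaningful.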

Logic-based approach: coming from artificial intelligence, the agent makes logical deductions over a set of known statements. This approach has to deal with several challenges. One of them is how to translate perceptions from the environment into logical statements. There are also risks of logical deadlocks, and, as with the previous approach, the calculations can be very demanding in terms of computing power.
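A very small logic-based agent can be sketched with forward chaining over propositional Horn rules: perceptions are translated into facts, rules derive new facts until nothing changes, and the agent performs an action that the derived facts justify. Propositional forward chaining is a simplification chosen for this sketch (logic-based agents often use richer logics and theorem provers); the fact and rule names, and the `do_` prefix marking actions, are hypothetical.

```python
from typing import FrozenSet, List, Set, Tuple

# A Horn rule: if all premises hold, the conclusion holds.
Rule = Tuple[FrozenSet[str], str]

RULES: List[Rule] = [
    (frozenset({"at_door", "door_closed"}), "can_open_door"),
    (frozenset({"can_open_door"}), "do_open_door"),
    (frozenset({"at_door", "door_open"}), "do_go_through"),
]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    # Repeatedly apply rules until no new fact can be deduced (a fixed point).
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


def decide(percepts: Set[str]) -> str:
    # Actions are provable facts of the form "do_<action>";
    # ties are broken alphabetically, an arbitrary choice for this sketch.
    proven = forward_chain(percepts, RULES)
    actions = sorted(f[len("do_"):] for f in proven if f.startswith("do_"))
    return actions[0] if actions else "noop"


print(decide({"at_door", "door_closed"}))  # open_door
```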

BDI approach: a kind of extended logic-based approach, where BDI stands for belief-desire-intention. It is a very sophisticated approach, similar to real human reasoning. Beliefs are a set of knowledge: information about the environment, the agent itself and other agents. Desires represent what the agent wants to do, and intentions come from desires while respecting feasibility. The intentions are typically realised through plans, and beliefs are continuously updated.
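The BDI cycle can be sketched in Python: beliefs are updated from perceptions, desires that are currently feasible become intentions, and the most preferred intention is realised through a plan. This is a minimal, hypothetical sketch rather than any particular BDI framework; the plan library, the feasibility test and the one-intention-at-a-time policy are simplifying assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

# Plan library entry: (beliefs required to start the plan, steps of the plan).
Plan = Tuple[Set[str], List[str]]


@dataclass
class BDIAgent:
    beliefs: Set[str] = field(default_factory=set)
    desires: List[str] = field(default_factory=list)       # ordered by preference
    plans: Dict[str, Plan] = field(default_factory=dict)   # hypothetical plan library
    intentions: List[str] = field(default_factory=list)

    def update_beliefs(self, percepts: Set[str]) -> None:
        # Beliefs are updated from perception (here they only accumulate;
        # a real BDI agent would also revise or retract them).
        self.beliefs |= percepts

    def feasible(self, desire: str) -> bool:
        # Feasible = a plan exists and everything it requires is believed.
        return desire in self.plans and self.plans[desire][0] <= self.beliefs

    def deliberate(self) -> None:
        # Intentions come from desires, filtered by feasibility.
        self.intentions = [d for d in self.desires if self.feasible(d)]

    def act(self) -> List[str]:
        # Realise the most preferred intention through its plan.
        if not self.intentions:
            return []
        return list(self.plans[self.intentions[0]][1])

    def step(self, percepts: Set[str]) -> List[str]:
        self.update_beliefs(percepts)
        self.deliberate()
        return self.act()


courier = BDIAgent(
    desires=["deliver_parcel", "recharge"],
    plans={
        "deliver_parcel": ({"has_parcel"}, ["go_to_customer", "hand_over"]),
        "recharge": (set(), ["go_to_charger", "plug_in"]),
    },
)
print(courier.step(set()))           # ['go_to_charger', 'plug_in']
print(courier.step({"has_parcel"}))  # ['go_to_customer', 'hand_over']
```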

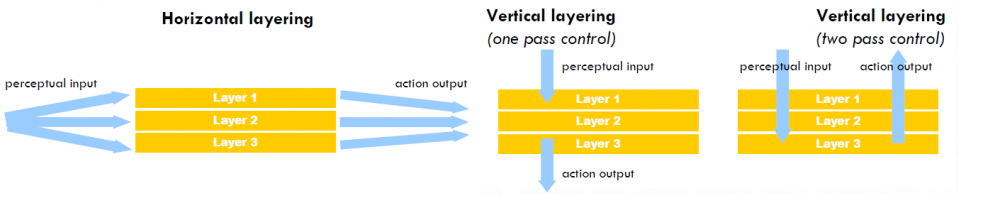

Hybrid agents

As mentioned above, both reactive and deliberative agents have their pros and cons, and one possible way to reach the best result is to combine them. A hybrid agent consists of layers: at least one deliberative layer and at least one reactive layer, though it can have many more. Each layer has its own behaviour, which together with the behaviours of the other layers creates the overall behaviour of the agent.

There are three options for layering: horizontal, vertical with one-pass control, and vertical with two-pass control, as shown in the following picture [3]:

Horizontal layering is simple to design, but the layers can compete with each other; therefore they need a mediator subsystem.
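In horizontal layering every layer receives the same sensor input and may propose an action, so a mediator has to pick one. A minimal Python sketch, assuming a hypothetical mediator that follows a fixed priority order (the reactive layer first, then the deliberative one); other mediation policies are equally possible. The layer functions and percept keys are invented for illustration.

```python
from typing import Callable, Dict, List, Optional

Percept = Dict[str, bool]
Layer = Callable[[Percept], Optional[str]]


def reactive_layer(p: Percept) -> Optional[str]:
    # Fast reflex: only proposes something in an emergency.
    return "brake" if p.get("obstacle", False) else None


def deliberative_layer(p: Percept) -> Optional[str]:
    # Stand-in for planning: proposes the next step of its plan.
    return "follow_route" if p.get("route_known", False) else "plan_route"


def mediator(proposals: List[Optional[str]]) -> str:
    # Hypothetical policy: the first layer (in priority order) with a proposal wins.
    for proposal in proposals:
        if proposal is not None:
            return proposal
    return "idle"


def horizontal_agent(p: Percept, layers: List[Layer]) -> str:
    # Every layer sees the same percept; the mediator resolves the competition.
    return mediator([layer(p) for layer in layers])


LAYERS = [reactive_layer, deliberative_layer]   # priority order used by the mediator
print(horizontal_agent({"obstacle": True, "route_known": True}, LAYERS))  # brake
print(horizontal_agent({"route_known": True}, LAYERS))                    # follow_route
```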

Vertical layering with one-pass control has simple interaction between layers, but it is not very flexible and it is vulnerable to failures. It cannot provide feedback between layers.

Vertical layering with two-pass control has less interaction between layers, but it is still very vulnerable to failures and has lower performance [4].
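The two vertical schemes differ in how control flows through the stack of layers. In the Python sketch below (the `Layer` classes and the `distance` percept are hypothetical), one-pass control passes the input up through the layers and the top layer produces the action, while two-pass control first sends information up and then sends commands back down, so that the bottom layer issues the action. In both cases a failure of any single layer breaks the chain, which is the vulnerability mentioned above.

```python
from typing import Dict, List, Optional

Info = Dict[str, object]


class Layer:
    """Hypothetical layer with an upward (information) and a downward (control) function."""

    def up(self, info: Info) -> Info:
        return info

    def down(self, info: Info, command: Optional[str]) -> Optional[str]:
        return command


class ReactiveLayer(Layer):
    def up(self, info: Info) -> Info:
        # Bottom layer: detect hazards from raw sensor data.
        return {**info, "hazard": info.get("distance", 10.0) < 1.0}

    def down(self, info: Info, command: Optional[str]) -> Optional[str]:
        # Bottom layer in two-pass control: turn the abstract command into an actuator action.
        return "brake" if command == "stop" else "drive"


class PlanningLayer(Layer):
    def up(self, info: Info) -> Info:
        # Top layer in one-pass control: decide the final action directly.
        return {**info, "action": "brake" if info["hazard"] else "drive"}

    def down(self, info: Info, command: Optional[str]) -> Optional[str]:
        # Top layer in two-pass control: issue an abstract command downwards.
        return "stop" if info["hazard"] else "continue"


def one_pass(percept: Info, stack: List[Layer]) -> str:
    # Input enters at the bottom (stack[0]) and flows up; the top layer yields the action.
    info = percept
    for layer in stack:
        info = layer.up(info)
    return str(info["action"])


def two_pass(percept: Info, stack: List[Layer]) -> str:
    # First pass: information flows up through all layers.
    info = percept
    for layer in stack:
        info = layer.up(info)
    # Second pass: control flows back down; the bottom layer emits the action.
    command: Optional[str] = None
    for layer in reversed(stack):
        command = layer.down(info, command)
    return str(command)


STACK = [ReactiveLayer(), PlanningLayer()]   # bottom to top
print(one_pass({"distance": 0.5}, STACK), two_pass({"distance": 0.5}, STACK))  # brake brake
```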

Resources

1. GLAVIC, Mevludin. "Agents and Multi-Agent Systems: A Short Introduction for Power Engineers." 2006.

2. BROOKS, R. A. "A robust layered control system for a mobile robot." IEEE Journal of Robotics and Automation, Volume 2(1), 1986.

3. ŠALAMON, Tomáš. Design of agent-based models: developing computer simulations for a better understanding of social processes. Řepín-Živonín: Tomáš Bruckner, 2011. ISBN 978-809-0466-111.

4. ŠALAMON, Tomáš. Multi-agent systems I. 2011.