Is it possible to stop fake news

Name: Is it possible to stop fake news?

Author: Tomáš Martínek (mart13) (talk)

Method: MAS Simulation

Tool: NetLogo

Problem definition

Fake news is a global problem, and it is getting worse every year. Is it possible to stop it? Fake news is created by people called "trolls", who write it and send it to other people. On the other side of the table are "elves", who defend people from fake news by sending "fake news warnings". When people receive fake news, they basically have three choices: resend it as it is (and effectively become a troll), resend it with a "fake news warning" (and become an elf), or not resend it at all. Can elves beat trolls and defend the majority of the system from fake news?

The goal of this simulation is to find out which conditions must hold for the majority of society to be defended from fake news.

Method

The simulation is created in NetLogo. I chose a multi-agent system (MAS) approach because the simulated environment is decentralized and its agents are autonomous.

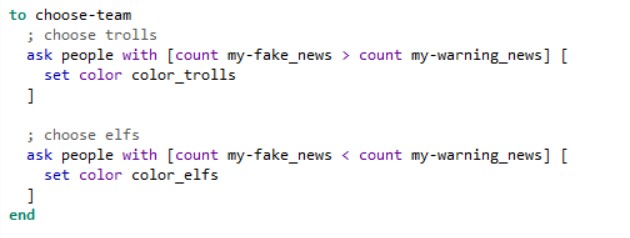

The MAS consists of reactive agents, which only follow a few basic rules:

- If an agent has received more fake news than warning news, the agent becomes a troll and starts to send fake news to the people around it.

- If an agent has received more warning news than fake news, the agent becomes an elf and starts to send warning news to the people around it.
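The two rules above can be sketched in a few lines of Python. This is only an illustration, not the NetLogo code of the model: the function name `next_role` and the role labels are hypothetical, and the handling of a tie (the agent keeps its current role) is an assumption, because the rules do not say what happens when both counts are equal.

```python
def next_role(current_role: str, fake_news: int, warnings: int) -> str:
    """Return the role of an agent after comparing its received messages."""
    if fake_news > warnings:
        return "troll"   # more fake news than warnings: the agent spreads fake news
    if warnings > fake_news:
        return "elf"     # more warnings than fake news: the agent spreads warnings
    # Assumption: on a tie the agent keeps whatever role it already had.
    return current_role
```

With this tie rule, an agent initiated as a troll or an elf keeps its role until it has received more messages of the opposite kind.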

Model

In this chapter I would like to describe my implementation of the simulation and its user interface.

Simulation

World

Fake news is mostly spread via e-mails and via links in electronic messages in general. To simulate the unbounded space of the internet, I chose a square map without any borders on its sides, so the world wraps around at its edges. Everyone can reach anyone they are somehow connected to; in this simulation, the connection is the distance between people.
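To make the idea of a borderless map concrete, here is a minimal Python sketch of how distance can be measured in a world that wraps around at its edges. The function name and the `width` and `height` parameters are assumptions for illustration; the model itself relies on NetLogo's world rather than on this code.

```python
import math


def torus_distance(a, b, width, height):
    """Shortest distance between points a and b on a wrapping (borderless) map.

    Both points are (x, y) tuples with 0 <= x < width and 0 <= y < height.
    """
    dx = abs(a[0] - b[0])
    dy = abs(a[1] - b[1])
    # Going "around the edge" may be shorter than going across the map.
    dx = min(dx, width - dx)
    dy = min(dy, height - dy)
    return math.hypot(dx, dy)
```

On a 10 x 10 map, for example, the points (0, 0) and (9, 0) are only 1 unit apart, because the shortest path crosses the edge.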

Entities

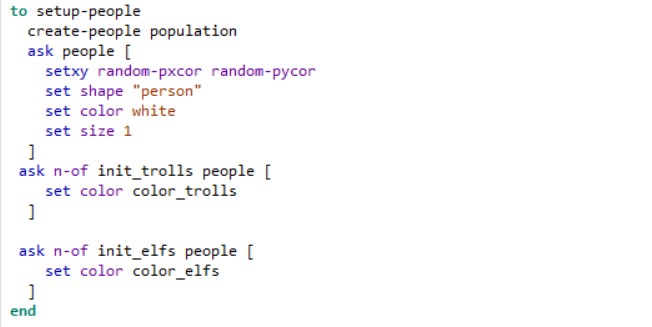

The simulation model has technically only one entity: people. According to their properties, I divide them into three groups:

- Trolls: people who were initiated as trolls or who currently have more fake news than warnings.

- Elves: people who were initiated as elves or who currently have more warnings than fake news.

- Basic people: people without any fake news or warning news.

Every group has a different colour and ability: trolls are red and can send fake news, elves are green and can send warning news, and basic people are white.

This reflects the real-world situation, where there are also only people, who form their beliefs and opinions about incoming news according to their surroundings (family, friends, the country they live in, ...).

Relationships

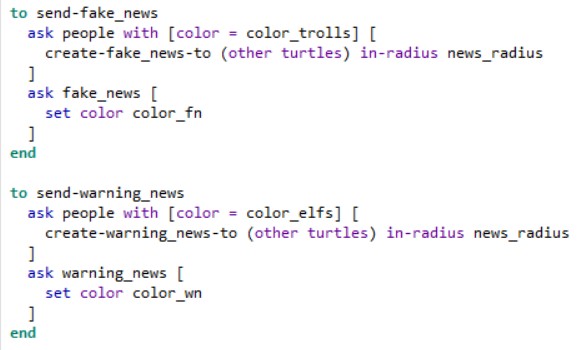

The mechanism of sending fake and warning news is built on relationships between people. The simulation has two kinds of relationship:

- Fake news relationship: a visualization of a troll sending fake news

- Warning news relationship: a visualization of an elf sending warning news

User interface

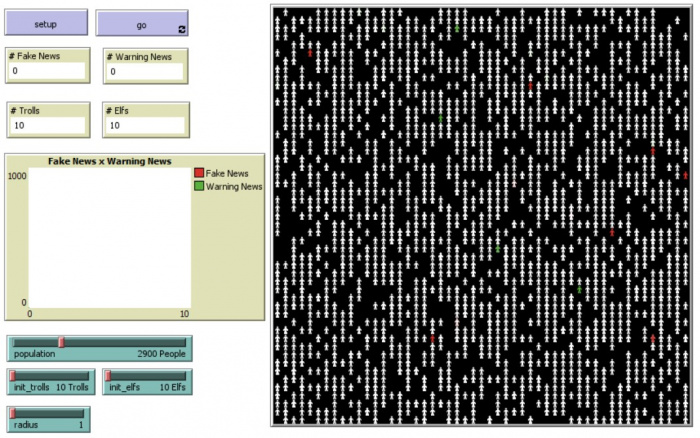

Overview

The simulation consists of a simulation window, inputs, monitors and a plot. Here is an overview and a detailed description of the user interface.

User inputs

The user can configure the setup rules for the simulation and change the behaviour of the model. Here is a list of the configurable variables:

- Population: the number of people

- Number of trolls initiated at the beginning

- Number of elves initiated at the beginning

- Radius: a reachable distance for sending messages
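The four inputs can be thought of as one configuration record. The Python sketch below is hypothetical: the class and field names are made up for illustration, and the checks on the population and on the initial counts are assumptions. Only the radius limits of 1 to 5 come from the description of the Radius variable in the results.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SimulationConfig:
    """Hypothetical container for the four user inputs of the model."""
    population: int
    initial_trolls: int
    initial_elves: int
    radius: float

    def __post_init__(self):
        if self.population <= 0:
            raise ValueError("population must be positive")
        if self.initial_trolls < 0 or self.initial_elves < 0:
            raise ValueError("initial counts cannot be negative")
        # Assumption: initial trolls and elves are taken from the population.
        if self.initial_trolls + self.initial_elves > self.population:
            raise ValueError("more trolls and elves than people")
        # The report limits Radius to the range 1 to 5.
        if not 1 <= self.radius <= 5:
            raise ValueError("radius must be between 1 and 5")
```

A valid setup would be, for instance, `SimulationConfig(population=100, initial_trolls=5, initial_elves=5, radius=2)`.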

Monitors and plots

To visualise the simulation process and its variables, and to show the results, the simulation uses several monitors and a plot:

- Monitor: Number of Fake news sent

- Monitor: Number of Warning news sent

- Monitor: Number of Elves

- Monitor: Number of Trolls

- Plot: Number of Fake news and Warning news in one graph

Scenario of simulation

The simulation begins with a population of people and a certain number of trolls and elves. When the simulation starts running, the trolls start to spread fake news and the elves start to spread warning news. As this news spreads, the basic population gradually turns into elves and trolls as well. The simulation can be stopped when the population no longer changes and the numbers of trolls and elves are stable.
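The scenario can be sketched as a small Python loop. This is a simplified illustration under several assumptions, not a port of the NetLogo model: people are placed uniformly at random on a wrapping square of side `side`, every troll or elf sends one message per tick to everyone within `radius`, an agent keeps its role on a tie, and the run stops after `stable_ticks` consecutive ticks without a role change (a heuristic stand-in for "the numbers are stable"). All names and default values are hypothetical.

```python
import math
import random


def run_simulation(population=100, trolls=5, elves=5, radius=2.0,
                   side=20.0, stable_ticks=10, max_ticks=500, seed=0):
    """Return (final_roles, ticks_run) for one run of the sketch model."""
    rng = random.Random(seed)
    positions = [(rng.uniform(0, side), rng.uniform(0, side))
                 for _ in range(population)]
    roles = (["troll"] * trolls + ["elf"] * elves
             + ["basic"] * (population - trolls - elves))
    fake = [0] * population   # fake news received by each person
    warn = [0] * population   # warnings received by each person

    def distance(a, b):
        # Distance on a map that wraps around at its edges.
        dx = abs(a[0] - b[0])
        dy = abs(a[1] - b[1])
        return math.hypot(min(dx, side - dx), min(dy, side - dy))

    # People do not move in this sketch, so neighbours are computed once.
    neighbours = [
        [j for j in range(population)
         if j != i and distance(positions[i], positions[j]) <= radius]
        for i in range(population)
    ]

    unchanged = 0
    for tick in range(1, max_ticks + 1):
        # Sending phase: roles from the start of the tick decide who sends what.
        for i, role in enumerate(roles):
            if role == "troll":
                for j in neighbours[i]:
                    fake[j] += 1
            elif role == "elf":
                for j in neighbours[i]:
                    warn[j] += 1
        # Update phase: apply the reactive rules to everyone at once.
        new_roles = []
        for i, role in enumerate(roles):
            if fake[i] > warn[i]:
                new_roles.append("troll")
            elif warn[i] > fake[i]:
                new_roles.append("elf")
            else:
                new_roles.append(role)  # assumption: keep the role on a tie
        unchanged = unchanged + 1 if new_roles == roles else 0
        roles = new_roles
        if unchanged >= stable_ticks:
            return roles, tick
    return roles, max_ticks
```

Calling `run_simulation(seed=1)` returns the final list of roles and the number of ticks that were run; counting `"troll"` and `"elf"` in that list corresponds to the two role monitors of the user interface.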

Results

In this chapter I would like to point out some interesting variables and behaviours of the simulation. Generally speaking, in my opinion the simulation works well and can answer the research question from the beginning: is it possible to stop fake news?

Situation 1

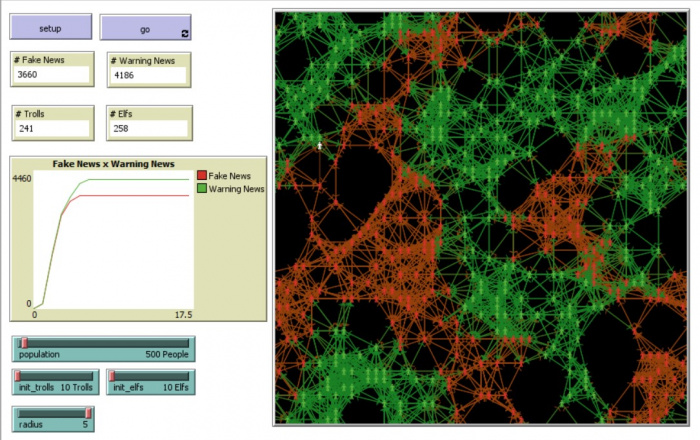

The first basic rule that can be seen in the simulation is that the group with more members at the beginning, elves or trolls, will also have more members at the end. This rule is very basic, and it depends on a high density of people within the reachable area.

Situation 2

A very interesting variable of the model is Radius. Its value is a very important factor for the whole "life" of the simulation. It defines the reachable space within which agents can send messages to each other. I limited this variable to values from 1 to 5.

If the value is 1, only a couple of surrounding agents are reachable by any one agent. The simulation progresses very slowly, and the user can clearly see the two colours spreading around the map. If the value is at the other extreme, 5, the simulation progresses very quickly and its behaviour looks very chaotic to the user.

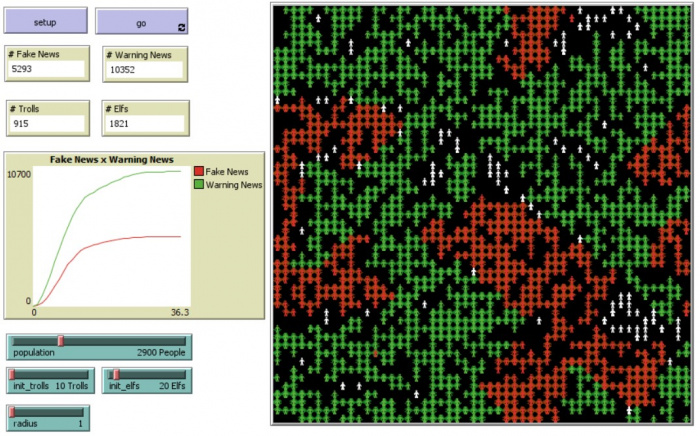

Another interesting variable is the population of people. With a fixed size of the world map, it can also be interpreted as "people density". In combination with different values of Radius, very interesting situations can happen. Depending on these values, the whole system changes from predictable behaviour to unpredictable chaos, and the basic rule "if there are more trolls at the beginning, there will be more at the end" no longer holds, as can be seen in the accompanying picture.
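The interplay of density and Radius can be made concrete with a rough estimate of how many people one agent reaches. The Python sketch below assumes that people are placed uniformly at random on a wrapping square map; the function name is hypothetical, and the side length has to be supplied by the reader because the size of the world is not stated here.

```python
import math


def expected_neighbours(population: int, radius: float, side: float) -> float:
    """Expected number of other people within `radius` of one person.

    Assumes uniform random placement on a wrapping square of side `side`
    and a radius of at most half the side, so the circle does not overlap itself.
    """
    if radius > side / 2:
        raise ValueError("radius must not exceed half the side length")
    density = (population - 1) / side ** 2      # other people per unit area
    return density * math.pi * radius ** 2      # area of the reachable circle
```

Because the reachable area grows with the square of the radius, raising Radius from 1 to 5 multiplies the expected number of reachable people by 25 at the same density, which fits the jump from slow, clearly visible spreading to very fast and chaotic behaviour.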

Situation 3

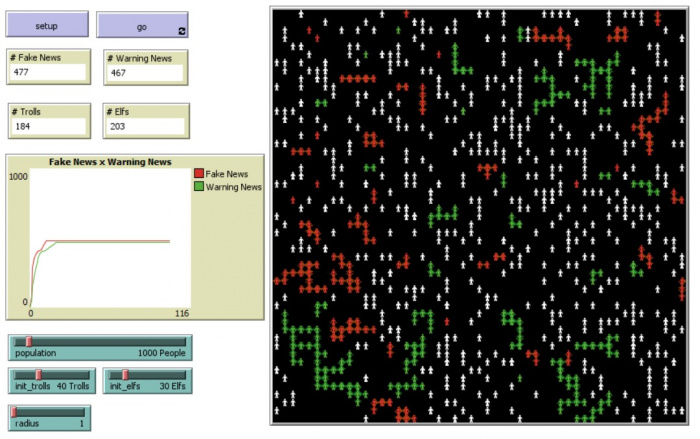

Another basic rule that can be seen in the simulation applies when the radius is small and the numbers of initiated elves and trolls are equal: the outcome then depends on the layout of people and on their density, which form clusters of teams. This situation can be seen in the accompanying picture.

Situation 4

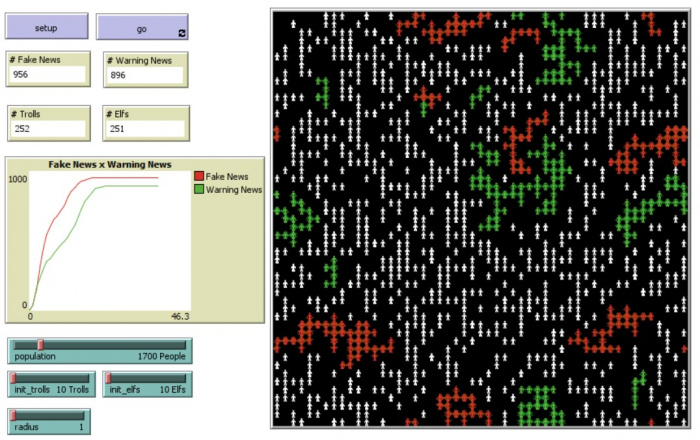

When I designed the model, I had very high hopes for the mechanism of changing teams over time. By this I mean the reactive rules controlling team membership: when an agent has more fake news, it is a troll; when it has more warning news, it is an elf. I was very surprised to see that this mechanism did not have any significant impact on the simulation. I had hoped for emergent, dynamic team changes, but this was really not the case. Even when some changes occurred, the number of agents that changed teams was very low.

I assume that such "team changes" do not often emerge in real-life situations either. When someone believes in something, it often takes the opinions of more than one or two other people to change his or her mind. And in bigger numbers, one changed mind does not mean much.

Conclusion

The fake news simulation is a simplified version of a real-life problem, but in my opinion it is very descriptive. It shows the fundamentals of how fake news and the warnings against it spread in society.

An extended version could, for example, have a more sophisticated layout of people. Some denser groups of people could be created, inspired by widely known leaks of e-mail addresses from big web services (the leaks I remember were, for example, from Facebook, Twitter and iCloud). In that situation, the key connection factor, the distance between people, would remain the same.

Another option to improve the model could be a variable describing a slowly decreasing power of persuasion of a message. The more distance a message has travelled, the less persuasive it would be. This could be a metaphor for the real phenomenon that local news is always more persuasive.
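One possible shape of such a variable is sketched below in Python. The exponential form, the function name and the default `decay_rate` are assumptions chosen only for illustration; the proposal itself does not fix any particular formula.

```python
import math


def persuasion_weight(distance_travelled: float, decay_rate: float = 0.5) -> float:
    """Weight of a message after travelling a given distance (1.0 at the source).

    Assumption: the persuasion power decays exponentially with distance.
    """
    if distance_travelled < 0 or decay_rate < 0:
        raise ValueError("distance and decay rate must be non-negative")
    return math.exp(-decay_rate * distance_travelled)
```

In an extended model, each received message could add this weight instead of 1 to the receiver's fake news or warning count, so that messages from nearby senders would matter more than messages from far away.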

Sources

- https://www.nytimes.com/2021/11/22/world/europe/belarus-migrants-facebook-fake-news.html

- https://semantic-visions.com/resource/defending-the-covid-19-vaccination-pipeline

- https://www.cits.ucsb.edu/fake-news/spread

- https://thenextweb.com/news/ai-isnt-going-to-stop-fake-news-syndication

- https://www.bbc.co.uk/bitesize/articles/z6kxxyc

- https://ischool.syr.edu/fake-news-why-people-believe-how-it-spreads-and-what-you-can-do-about-it/

- https://futurism.com/fake-news-study-spread